Algorithms learn self-punishment schemes to keep supracompetitive prices

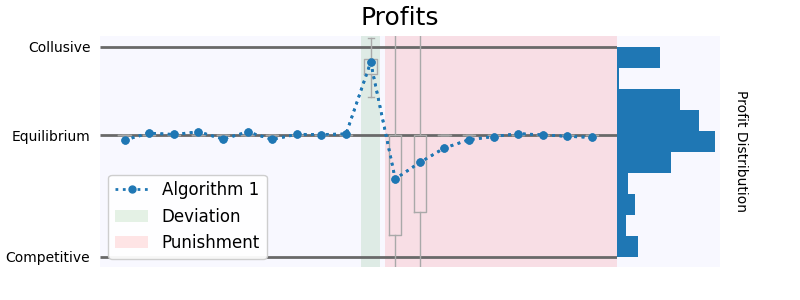

Reinforcement learning algorithms are gradually replacing humans in many decision-making processes, such as pricing in high-frequency markets. Recent studies on algorithmic pricing have shown that algorithms can learn sophisticated grim-trigger strategies that sustain supra-competitive prices. This paper focuses on detecting algorithmic collusion. One frequent suggestion is to look at the inputs of the strategies, for example at whether the algorithms condition their prices on their competitors’ previous prices. The first part of the paper shows that this approach might not be sufficient to detect collusion, since the algorithms can learn reward-punishment schemes that are fully independent of the rival’s actions. The mechanism that ensures the stability of supra-competitive prices is self-punishment.

The second part of the paper explores a novel test for detecting algorithmic collusion. The test builds on the intuition that, just as algorithms can learn to collude, they might also learn to exploit collusive strategies. Indeed, since they are not designed to learn subgame-perfect equilibrium strategies, their strategies may be exploitable. When one algorithm is unilaterally retrained while its competitor’s collusive strategy is held fixed, it learns a more profitable strategy. Usually, but not always, the new strategy is more competitive. Since this change in strategy occurs only when the algorithms are colluding, retraining can be used as a test to detect algorithmic collusion.
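The retraining idea can be illustrated with a small sketch. This is not the paper's implementation: it replaces reinforcement-learning retraining with exact value iteration (so the result is deterministic), and the two-price grid, linear demand, discount factor, and both example strategies are hypothetical choices made only for illustration. The focal firm's best response is computed against a rival whose strategy is held fixed, and the gain over the incumbent strategy serves as the test statistic.

```python
from itertools import product

# All values below are illustrative assumptions, not taken from the paper.
PRICES = [0.7, 1.0]              # hypothetical price grid
LOW, HIGH = 0, 1                 # indices into PRICES
DELTA = 0.95                     # assumed discount factor
ACTIONS = range(len(PRICES))
STATES = list(product(ACTIONS, repeat=2))   # (own last price, rival last price)


def profit(own, rival):
    """Per-period profit under an assumed demand q = 1 - p_own + 0.5 * p_rival, zero cost."""
    p, r = PRICES[own], PRICES[rival]
    return p * (1.0 - p + 0.5 * r)


def rival_action(rival_strategy, state):
    """The rival reads the state from its own side: (its last price, our last price)."""
    own_last, rival_last = state
    return rival_strategy[(rival_last, own_last)]


def solve(rival_strategy, own_strategy=None, tol=1e-10):
    """Discounted value per state against a fixed rival.

    With own_strategy given, evaluate that strategy; with None, compute the
    best-response value by value iteration (a stand-in for retraining).
    """
    values = {s: 0.0 for s in STATES}
    while True:
        updated = {}
        for s in STATES:
            b = rival_action(rival_strategy, s)
            choices = ACTIONS if own_strategy is None else [own_strategy[s]]
            updated[s] = max(profit(a, b) + DELTA * values[(a, b)] for a in choices)
        if max(abs(updated[s] - values[s]) for s in STATES) < tol:
            return updated
        values = updated


def retraining_gain(own_strategy, rival_strategy, start):
    """Profit gain from unilaterally re-optimizing, starting from state `start`."""
    best = solve(rival_strategy)[start]
    incumbent = solve(rival_strategy, own_strategy)[start]
    return best - incumbent


# Hypothetical strategy that conditions only on the firm's own last price.
repeat_own_price = {s: s[0] for s in STATES}
# Hypothetical competitive benchmark: always charge the low price.
always_low = {s: LOW for s in STATES}

gain_collusive = retraining_gain(repeat_own_price, repeat_own_price, (HIGH, HIGH))
gain_competitive = retraining_gain(always_low, always_low, (LOW, LOW))
print(f"gain when colluding: {gain_collusive:.3f}")
print(f"gain when competing: {gain_competitive:.3f}")
```

In this toy setting the re-optimized firm gains by undercutting a rival that keeps its price high regardless of what the firm does, while no gain is available against the competitive benchmark. A strictly positive gain is what the sketch treats as the signal of collusion.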

To make the test implementable, the last part of the paper studies whether the same insights on collusive behavior can be obtained using only observational data from a single algorithm. The result is a unilateral empirical test for algorithmic collusion that requires no assumptions on either the algorithms themselves or the underlying environment. The key insight is that algorithms, during their learning phase, produce natural experiments that allow an observer to estimate their behavior in counterfactual scenarios. The simulations show that, at least in a controlled experimental setting, the test is extremely successful in detecting algorithmic collusion.